Final Project - Goal-Oriented Action Planning

GPR440 Advanced AI

[ 5.2.21 ]

| Made With | Languages |
|---|---|
| Unity3D, Rider | C# |

Overview

The Project

My original intention for this project was to implement a Goal-Oriented Action Planning (GOAP) model in Unity/C# that was complex enough to demonstrate its value, yet performant enough not to hurt overall performance when used in a very simple RTS. I thought this would also let me evaluate its usefulness as a utility AI.

Due to other projects and end-of-semester antics, I was unable to get that far; however, I did get it working and gained insight from it. You can find my code here, but I would recommend using it more for inspiration: I lacked the time to refine it, and it is purpose-built, so it may not fulfill your actual needs.

GOAP

GOAP is an AI approach that enables an agent to, as the name suggests, create a plan out of a series of actions to accomplish a goal it has been given. Its power comes from the fact that once everything is set up, you can add new actions and goals with relative ease, without having to build and maintain complex behaviors and transitions.

The other benefit is that GOAP produces a more dynamic AI that can respond to many scenarios in slightly different ways, giving you a smarter AI with less long-term maintenance.

My Approach

General

A lot of GOAP sources say that GOAP requires 6 classes:

- An Agent Class: the actual agent

- An Action Class: an action that an agent can perform

- A Planner Class: creates a plan out of actions for an agent

- A State Machine: a finite state machine

- A State Class: a state in the FSM

- A GOAP Interface: the interface between the FSM and the game-specific agent code

I, however, cut out the last three. While this works and does simplify some things, it makes supplying targets to actions (so that the actions stay reusable) a little hokey. I would recommend something closer to the “full” approach described in the sources at the bottom: it is much more thorough, and it follows the suggestion of keeping the planner ‘general’, which makes it easier to drag and drop agents and actions (the entire point of using GOAP).

Action

One of the building blocks of GOAP is the action. This is how an agent interacts with the world, and what makes up the plan it follows. I tracked preconditions and effects (as well as the status of an action) using enums.

```csharp
public enum Effect
{
    MAKE_ORE,
    MAKE_FOOD,
    MAKE_WEAPON,
    DEPOSIT_ORE,
    DEPOSIT_FOOD,
    DEPOSIT_WEAPON,
    MAKE_PERSON,
    INCREASES_HUNGER,
    DECREASES_HUNGER,
    EQUIP_WEAPON,
    ATTACKS_ENEMY,
    SET_GOAL_BIAS,
    DRAFT_AGENT,
    EMPLOY_AGENT
}

public enum Precondition
{
    HAS_FOOD,
    HAS_ORE,
    HAS_WEAPON,
    WEAPON_EQUIPED
}

public enum ActionStatus
{
    FAILED,
    WAITING,
    COMPLETE
}
```

The actual actions are built on top of an abstract class that tracks what all actions have in common, with the specific logic added in the action classes you create. You need a way to hold each action's preconditions and effects, its target (since most actions require some location to go to), and, if you want or need them, the time it takes to complete and its ‘cost’ for use in planning.

| GoapAction | Type |
|---|---|
| `_preconditions` | `HashSet<Precondition>` |
| `_effects` | `HashSet<Effect>` |
| `_target` | `Transform` |
| `_timeToComplete` | `float` |
| `_cost` | `float` |
| `PerformAction()` | `abstract ActionStatus` |
| `SatisfiesPreconditions(HashSet<Effect> worldState)` | `abstract bool` |

For functions, you need one that handles what happens when the action is performed, and at least one that tells you whether the action can be performed given the world state. For example, an action to make a weapon requires the agent making it to have ore in its inventory. An action with no preconditions simply returns true.
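A minimal sketch of the base class and two concrete actions follows. It is not my project code: MakeWeaponAction and GatherOreAction are hypothetical, Unity's Transform target is left out so it compiles as plain C#, one call to PerformAction() stands in for one second of game time, and it assumes that "has ore" shows up in the world state as MAKE_ORE.

```csharp
using System.Collections.Generic;

// Subsets of the enums above, repeated so this sketch compiles on its own.
public enum Effect { MAKE_ORE, MAKE_WEAPON }
public enum Precondition { HAS_ORE }
public enum ActionStatus { FAILED, WAITING, COMPLETE }

// Base class: holds what every action needs; subclasses add the actual logic.
// Unity's Transform _target is left out so the sketch runs outside Unity.
public abstract class GoapAction
{
    protected readonly HashSet<Precondition> _preconditions = new HashSet<Precondition>();
    protected readonly HashSet<Effect> _effects = new HashSet<Effect>();
    protected float _timeToComplete;
    protected float _cost = 1f;

    public IReadOnlyCollection<Effect> Effects => _effects;
    public float Cost => _cost;

    // Runs one update of the action and reports how it is going.
    public abstract ActionStatus PerformAction();

    // Returns true if the action can be performed in the given world state.
    public abstract bool SatisfiesPreconditions(HashSet<Effect> worldState);
}

// Hypothetical action: turns ore into a weapon over a few updates.
public class MakeWeaponAction : GoapAction
{
    private float _elapsed;

    public MakeWeaponAction()
    {
        _preconditions.Add(Precondition.HAS_ORE);
        _effects.Add(Effect.MAKE_WEAPON);
        _timeToComplete = 3f;
    }

    // Assumption: the agent "has ore" once MAKE_ORE appears in the world state.
    public override bool SatisfiesPreconditions(HashSet<Effect> worldState)
        => worldState.Contains(Effect.MAKE_ORE);

    // Assumption: one call = one second, standing in for Unity's Time.deltaTime.
    public override ActionStatus PerformAction()
    {
        _elapsed += 1f;
        return _elapsed >= _timeToComplete ? ActionStatus.COMPLETE : ActionStatus.WAITING;
    }
}

// Hypothetical action with no preconditions.
public class GatherOreAction : GoapAction
{
    public GatherOreAction() { _effects.Add(Effect.MAKE_ORE); }

    // No preconditions, so it can always run.
    public override bool SatisfiesPreconditions(HashSet<Effect> worldState) => true;

    public override ActionStatus PerformAction() => ActionStatus.COMPLETE;
}
```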

Agent

The GOAP agent follows a similar approach to the actions: you start with an abstract base class that all other agents inherit from and extend with more specific functionality. At minimum, it needs somewhere to hold the agent's goals, the plan it is currently enacting, and all of the actions available to it.

| GoapAgent | Type |
|---|---|
| `_goals` | `List<Goal>` |
| `_plan` | `List<GoapAction>` |
| `_availableActions` | `List<GoapAction>` |
| `PerformCurrentAction()` | `abstract void` |
| `CalcGoalRelevancy()` | `abstract HashSet<Effect>` |


Each goal is stored in a small Goal class:

```csharp
public class Goal
{
    public Effect goal;      // the effect this goal wants to bring about
    public float bias;       // base lean toward this goal, or an order from a superior
    public float relevance;  // priority computed from the current world state
}
```

For functions, you need one that tries to perform the current action. On further review, PerformCurrentAction() did not vary across agents in my implementation, so it did not need to be overridden. However, if you want agents that handle performing actions in unique ways, keep it abstract.
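Here is one way a shared PerformCurrentAction() could look. It is a sketch assuming the plan is a list consumed from the front and that FAILED means the agent should drop its plan and request a new one. It is marked virtual rather than abstract so individual agents can still override it; TimedAction, SetPlan(), NeedsPlan and PlanLength are hypothetical.

```csharp
using System.Collections.Generic;

public enum ActionStatus { FAILED, WAITING, COMPLETE }

// Minimal stand-in for GoapAction: PerformAction() is all this sketch needs.
public abstract class GoapAction
{
    public abstract ActionStatus PerformAction();
}

// Hypothetical action that completes after a fixed number of calls.
public class TimedAction : GoapAction
{
    private int _stepsLeft;
    public TimedAction(int steps) { _stepsLeft = steps; }

    public override ActionStatus PerformAction()
        => --_stepsLeft <= 0 ? ActionStatus.COMPLETE : ActionStatus.WAITING;
}

public class GoapAgent
{
    protected List<GoapAction> _plan = new List<GoapAction>();

    public bool NeedsPlan => _plan.Count == 0;
    public int PlanLength => _plan.Count;

    public void SetPlan(List<GoapAction> plan) => _plan = plan;

    // Runs the first action of the plan once per call.
    public virtual void PerformCurrentAction()
    {
        if (_plan.Count == 0) return;

        switch (_plan[0].PerformAction())
        {
            case ActionStatus.COMPLETE: _plan.RemoveAt(0); break; // move on to the next action
            case ActionStatus.FAILED: _plan.Clear(); break;       // plan is invalid: replan
            case ActionStatus.WAITING: break;                     // keep working next update
        }
    }
}
```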

I also have a goal-relevance function, CalcGoalRelevancy(). It goes through the agent's goals and finds the one that should have the highest priority given the current world state. For example, a farmer may normally treat “farming” as its most important goal until it becomes very hungry, at which point eating becomes the most relevant goal. Another note: if different units should not differ in how they prioritize goals, the Goal class could just as easily hold its own relevance calculation.
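A sketch of the farmer example, assuming a hypothetical Hunger value between 0 (full) and 1 (starving) and a fixed score of 0.5 for farming. Each goal's relevance is its score plus its bias, and the winning goal is returned as a one-element set to match the HashSet<Effect> return type of CalcGoalRelevancy(). FarmerAgent is an illustrative name, not a class from my project.

```csharp
using System.Collections.Generic;
using System.Linq;

public enum Effect { MAKE_FOOD, DECREASES_HUNGER }

public class Goal
{
    public Effect goal;
    public float bias;
    public float relevance;
}

// Hypothetical farmer used only to illustrate goal relevance.
public class FarmerAgent
{
    public float Hunger; // assumption: 0 = full, 1 = starving

    public List<Goal> Goals = new List<Goal>
    {
        new Goal { goal = Effect.MAKE_FOOD },
        new Goal { goal = Effect.DECREASES_HUNGER },
    };

    // Scores every goal, then returns the most relevant one for the planner.
    public HashSet<Effect> CalcGoalRelevancy()
    {
        foreach (Goal g in Goals)
        {
            float score = g.goal == Effect.DECREASES_HUNGER
                ? Hunger   // eating matters more the hungrier the farmer is
                : 0.5f;    // farming is a steady default
            g.relevance = score + g.bias;
        }

        Goal best = Goals.OrderByDescending(x => x.relevance).First();
        return new HashSet<Effect> { best.goal };
    }
}
```

With Hunger at 0.2, farming (0.5) wins; at 0.9, eating wins. A bias from a superior is simply added on top of these scores.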

Captain Agent & Goal Bias

In the Goal class, you will see a field called ‘bias.’ It can be used either to make an agent lean toward a particular goal by default, or by a superior agent to influence its decision-making. Part of my aim with this project was to implement a command-and-control hierarchy in which orders propagate downward: upper levels focus on big-picture planning, while bottom-level ‘atomic’ (largely autonomous) units carry out small-picture tasks in service of their superior's high-level goals.

My project contains only a single captain; every other unit is atomic. I wanted three tiers to divide planning and consideration further, but lacked the time. The captain looks at city resources, manpower, and external threats to allocate people and resources properly. In doing so, it assigns each new unit to become a soldier or a laborer, and then, by setting goal biases, decides whether a soldier should focus on patrolling or defending, and whether a laborer should focus on farming or mining.
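A sketch of how a captain might steer laborers through bias alone. It assumes a hypothetical rule (focus on whichever resource the city has less of) and a bias of 1, assumed to be large enough to outweigh a laborer's own scoring. Laborer, Captain, IssueOrders() and SetGoalBias() are illustrative names rather than my project's API, and soldier assignment is left out.

```csharp
using System.Collections.Generic;

public enum Effect { MAKE_FOOD, MAKE_ORE }

public class Goal
{
    public Effect goal;
    public float bias;
    public float relevance;
}

// Hypothetical laborer: only its goal list matters for this sketch.
public class Laborer
{
    public List<Goal> Goals = new List<Goal>
    {
        new Goal { goal = Effect.MAKE_FOOD },
        new Goal { goal = Effect.MAKE_ORE },
    };

    // Biases the chosen goal and clears the bias on the others.
    public void SetGoalBias(Effect focus, float bias)
    {
        foreach (Goal g in Goals)
            g.bias = g.goal == focus ? bias : 0f;
    }
}

// Hypothetical captain: steers laborers toward whichever resource the city has less of.
public class Captain
{
    public const float OrderBias = 1f; // assumption: big enough to outweigh a laborer's own scores

    public void IssueOrders(IEnumerable<Laborer> laborers, int cityFood, int cityOre)
    {
        Effect focus = cityFood <= cityOre ? Effect.MAKE_FOOD : Effect.MAKE_ORE;
        foreach (Laborer laborer in laborers)
            laborer.SetGoalBias(focus, OrderBias);
    }
}
```

The laborers still plan for themselves; the captain only changes which goal comes out on top when they calculate relevance.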

Planner

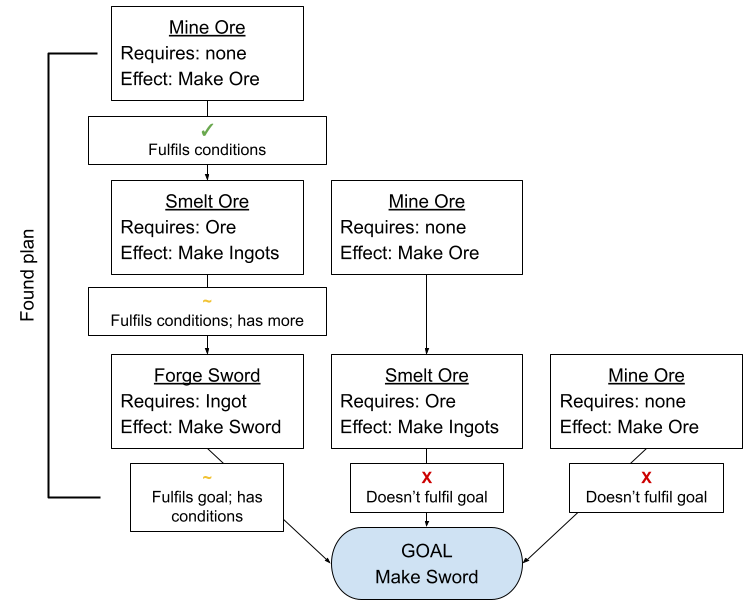

The planner is by far the hardest part to get right. It needs to look at the actions available to the agent requesting a plan, as well as that agent's primary goal. From there, it builds a graph in which nodes are world states and actions are the edges between them, and then uses this graph to find the best ‘path’ to the goal.

One approach is to work backwards: start with the desired world state, find actions whose effects produce it, then find actions that satisfy any of those actions' preconditions that are not already fulfilled, and so on until you reach the current world state.
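To make the backward approach concrete, here is a small regression search. It is a hypothetical sketch, not the planner I used: conditions and effects are both expressed as Effect values, effects only add facts, PlanAction is a stand-in type, and a depth limit stops runaway chains. For each action that produces an unmet goal, the new sub-goals are whatever that action leaves unmet plus its own preconditions. It returns the first plan it finds, not necessarily the cheapest.

```csharp
using System.Collections.Generic;
using System.Linq;

public enum Effect { MAKE_ORE, MAKE_WEAPON, EQUIP_WEAPON }

// Hypothetical stand-in for an action: what it needs and what it produces.
public class PlanAction
{
    public string Name;
    public HashSet<Effect> Needs;
    public HashSet<Effect> Produces;

    public PlanAction(string name, HashSet<Effect> needs, HashSet<Effect> produces)
    {
        Name = name;
        Needs = needs;
        Produces = produces;
    }
}

public static class BackwardPlanner
{
    // Works back from the goals to the current state.
    // Returns actions in the order they should run, or null if no plan is found.
    public static List<PlanAction> Plan(HashSet<Effect> state, HashSet<Effect> goals,
                                        List<PlanAction> actions, int maxDepth = 5)
    {
        // Goals the current world state already satisfies need no action.
        var unmet = new HashSet<Effect>(goals.Where(g => !state.Contains(g)));
        if (unmet.Count == 0) return new List<PlanAction>();
        if (maxDepth == 0) return null; // stop runaway chains

        // Only actions whose effects meet at least one unmet goal are useful here.
        foreach (PlanAction action in actions.Where(x => x.Produces.Overlaps(unmet)))
        {
            // New sub-goals: whatever this action leaves unmet, plus its own preconditions.
            var subGoals = new HashSet<Effect>(unmet);
            subGoals.ExceptWith(action.Produces);
            subGoals.UnionWith(action.Needs);

            List<PlanAction> prefix = Plan(state, subGoals, actions, maxDepth - 1);
            if (prefix != null)
            {
                prefix.Add(action); // runs after the actions that enable it
                return prefix;
            }
        }
        return null;
    }
}
```

With actions for gathering ore, making a weapon, and equipping it, asking for EQUIP_WEAPON from an empty world state yields GatherOre, MakeWeapon, EquipWeapon.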

The approach I took comes from this repo by Owens, who wrote “Goal Oriented Action Planning for a Smarter AI” (listed below). It is the forward approach: the root node of the graph holds the current world state and the remaining goals, and the graph is built recursively from there.

```csharp
using System;
using System.Collections.Generic;

// class for the nodes the planner uses
class GraphNode
{
    public GraphNode parent;          // parent node
    public int costSoFar;             // cost to get this far
    public HashSet<Effect> goalsLeft; // goals still needing to be met
    public HashSet<Effect> state;     // effects currently active
    public GoapAction action;         // the action taken to get here

    public GraphNode(GraphNode parent, int costSoFar, HashSet<Effect> goalsLeft,
                     HashSet<Effect> state, GoapAction action)
    {
        this.parent = parent;
        this.costSoFar = costSoFar;
        this.goalsLeft = goalsLeft;
        this.state = state;
        this.action = action;
    }
}

// … in ProcessPlanRequest() in Planner class
// build out the graph
GraphNode root = new GraphNode(null, 0, _currRequest.goals, initWorldState, null);
// convert list of actions available to agent to a hashset
HashSet<GoapAction> availableActions = new HashSet<GoapAction>(_currRequest.agent.AvailableActions);
bool success = BuildGraph(root, solutions, availableActions);

// … also in Planner class
private bool BuildGraph(GraphNode parent, List<GraphNode> leaves, HashSet<GoapAction> actionsLeft)
{
    bool foundSolution = false;

    // check thru all actions
    foreach (GoapAction action in actionsLeft)
    {
        // check if action is currently usable
        if (action.SatisfiesPreconditions(parent.state))
        {
            // create new state and apply action to it
            HashSet<Effect> currState = new HashSet<Effect>(parent.state);
            ApplyActionToWorldState(ref currState, action);

            // remove completed goals
            HashSet<Effect> newGoalsLeft = new HashSet<Effect>(parent.goalsLeft);
            ApplyActionToGoals(ref newGoalsLeft, action);

            // create a fresh instance of the action for this agent's plan
            var actionInst = (GoapAction)Activator.CreateInstance(action.GetType());
            actionInst.Init(_currRequest.agent);

            // create new graph node; "+ 1" rather than "parent.costSoFar++",
            // which would pass the old cost and also change the parent's cost
            GraphNode node = new GraphNode(parent, parent.costSoFar + 1, newGoalsLeft, currState, actionInst);

            // check if solution
            if (newGoalsLeft.Count == 0)
            {
                // -> YES: new solution; add to leaves
                leaves.Add(node);
                foundSolution = true; // at least one plan exists
            }
            else
            {
                // -> NO: call build graph again on new node
                // with a new set of available actions, minus the one just performed
                HashSet<GoapAction> lessActions = new HashSet<GoapAction>(actionsLeft);
                lessActions.Remove(action);

                // try to find a leaf further down this branch
                if (BuildGraph(node, leaves, lessActions))
                    foundSolution = true;
            }
        }
    }

    return foundSolution;
}
```

BuildGraph() looks at the given world state, finds which actions can be used in it, and applies each of them. For each one, it creates a new node and checks whether all of the provided goals have been completed. If a performable action completes all of the goals, that node is recorded as a potential solution (you have found an achievable plan to reach the goal), and that branch is not explored any further.
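BuildGraph() relies on ApplyActionToWorldState() and ApplyActionToGoals(), whose bodies are not shown. A minimal sketch of both, assuming effects only ever add to the world state (nothing is consumed) and that a goal counts as met once the matching Effect is produced. The GoapAction here is a stand-in holding only an Effects set, and PlannerHelpers is a hypothetical container.

```csharp
using System.Collections.Generic;

public enum Effect { MAKE_ORE, DEPOSIT_ORE }

// Minimal stand-in for GoapAction: only its effects matter to these helpers.
public class GoapAction
{
    public HashSet<Effect> Effects = new HashSet<Effect>();
}

public static class PlannerHelpers
{
    // An action's effects become part of the world state.
    public static void ApplyActionToWorldState(ref HashSet<Effect> state, GoapAction action)
        => state.UnionWith(action.Effects);

    // Any goal the action's effects satisfy is no longer outstanding.
    public static void ApplyActionToGoals(ref HashSet<Effect> goals, GoapAction action)
        => goals.ExceptWith(action.Effects);
}
```

Because HashSet<T> is a reference type, `ref` is not strictly needed here; it is kept only to match the calls in BuildGraph().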

If the action does not complete all of the goals, you call BuildGraph() on the new node, which repeats the same logic with the modified world state and without the action just used. This continues until all options have been exhausted.
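Once BuildGraph() has finished, the leaves hold every complete plan it found. A sketch of picking the best one, assuming "best" means the lowest costSoFar and that the root is the only node without an action; the stripped-down GraphNode with string action names is a stand-in, and PlanExtraction is a hypothetical name.

```csharp
using System.Collections.Generic;
using System.Linq;

// Minimal stand-in node: only the fields needed to read a plan back out.
public class GraphNode
{
    public GraphNode parent;
    public int costSoFar;
    public string action; // hypothetical: action name instead of a GoapAction instance

    public GraphNode(GraphNode parent, int costSoFar, string action)
    {
        this.parent = parent;
        this.costSoFar = costSoFar;
        this.action = action;
    }
}

public static class PlanExtraction
{
    // Picks the cheapest leaf and walks parent links back to the root.
    public static List<string> CheapestPlan(List<GraphNode> leaves)
    {
        if (leaves.Count == 0) return null; // no achievable plan

        GraphNode best = leaves.OrderBy(l => l.costSoFar).First();

        var plan = new List<string>();
        for (GraphNode n = best; n != null && n.action != null; n = n.parent)
            plan.Add(n.action); // collected leaf-first
        plan.Reverse();         // so the first action to perform comes first
        return plan;
    }
}
```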

If the initial call to BuildGraph() returns false, you know there is no plan the agent can perform to complete the goal. From here, you can have the agent queue another request so the planner tries again later, give it a back-up plan to run when it has no actual plan, and/or submit a new request with its next most-relevant goal (I did not do this).
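The last option could look like this hypothetical sketch: try each goal in descending relevance until the planner returns a plan. The tryPlan delegate stands in for a planner request and is assumed to return null when no plan exists; PlanFallback and PlanWithFallback() are illustrative names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum Effect { MAKE_FOOD, MAKE_ORE, DECREASES_HUNGER }

public class Goal
{
    public Effect goal;
    public float bias;
    public float relevance;
}

public static class PlanFallback
{
    // Tries goals from most to least relevant; returns the first plan found, or null if none.
    // tryPlan is a hypothetical stand-in for a planner request that returns null on failure.
    public static List<string> PlanWithFallback(IEnumerable<Goal> goals,
                                                Func<Effect, List<string>> tryPlan,
                                                out Effect chosen)
    {
        foreach (Goal g in goals.OrderByDescending(x => x.relevance))
        {
            List<string> plan = tryPlan(g.goal);
            if (plan != null)
            {
                chosen = g.goal;
                return plan;
            }
        }
        chosen = default(Effect);
        return null; // no goal is achievable: retry later or run a back-up behavior
    }
}
```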

My Thoughts On GOAP

Despite running out of time to achieve my initial goals, I really like the possibilities GOAP provides. Without it, if I wanted to create a game with a variety of complex units, I would have to spend a lot of time designing and refining their behaviors. Even though the units would share functionality, every addition to their behavior would need to be introduced individually into each unit type's brain.

With GOAP, however, adding or removing actions and goals is about as simple as it sounds, and the planner figures out how they fit together. Editing a unit type would just mean adding or removing an action or goal from the lists at the top of its script.

While it is definitely not worth it if you only need a simple AI or a single one, it is worth it if you want an agent that can literally plan out a series of actions to solve a problem while remaining relatively easy to expand.

Also, on the subject of utility AI: GOAP is essentially a utility AI that has been given the reins of an actual agent. It can prioritize goals based on what is happening around it and even create a course of action. A lighter version of GOAP could easily be adapted to give advice to a player.

Readings

The sources I used when researching and creating my implementation.

Chaudhari, Vedant. “Goal Oriented Action Planning.” Medium. Medium, 12 Dec. 2017. Web. 02 May 2021.

Millington, Ian, and John Funge. “Goal-Oriented Behavior.” Artificial Intelligence for Games. 2nd ed. London: Morgan Kaufmann, 2009. 401-26. Print.

Orkin, Jeff. “Agent Architecture Considerations for Real-Time Planning in Games.” American Association for Artificial Intelligence. 2004.

Owens, Brent. “Goal Oriented Action Planning for a Smarter AI.” Game Development Envato Tuts+. Envato Tuts, 23 Apr. 2014. Web. 02 May 2021.

Pittman, David L. “Practical Development Of Goal-Oriented Action Planning AI.” Thesis. The Guildhall at Southern Methodist University, 2007. Jorkin.com. MIT Media Lab. Web. 2 May 2021.

Thompson, Tommy. “Building the AI of F.E.A.R. with Goal Oriented Action Planning.” Gamasutra. Gamasutra, 7 May 2020. Web. 02 May 2021.